Let me start with a statistic that honestly caught me off guard: 90% of professional developers now rely on open-source tools in their daily workflows, and the artificial intelligence software market in the supply chain alone is projected to explode from $7.19 billion to $30.81 billion by 2029. That’s a staggering 43.9% compound annual growth rate that tells us something crucial – we’re not just witnessing a trend, we’re living through a fundamental shift in how modern cloud infrastructure operates.

Here’s the thing, though: while businesses are scrambling to adopt cloud-native solutions, many development teams are still piecing together fragmented toolchains that create more headaches than solutions. The core problem isn’t a lack of tools – it’s the overwhelming abundance of choices without clear guidance on what actually works in production.

This affects everyone from solo developers building their first microservices architecture to enterprise teams managing complex Kubernetes deployments. Small startups find themselves drowning in configuration files, while established companies struggle with vendor lock-in and skyrocketing cloud costs. Marketing teams trying to leverage AI for supply chain optimization hit walls when their infrastructure can’t scale, and agencies managing multiple client projects face the nightmare of maintaining consistency across different technology stacks.

That said, there’s never been a better time to be a cloud-native developer. The Cloud Native Computing Foundation (CNCF) ecosystem has matured dramatically, and I think we’re finally at the point where you can build enterprise-grade applications using entirely open-source tools without compromising on security, scalability, or developer experience.

The Data Behind the Cloud-Native Revolution

Let’s talk numbers for a moment, because the growth trajectory here is genuinely mind-blowing. According to recent studies, the CNCF now supports over 30 major open-source projects that collectively power millions of production workloads globally. GitOps alone has cultivated massive communities around projects like Argo and Flux, indicating that developers are increasingly embracing declarative infrastructure management.

But here’s what most people don’t realize: the surge isn’t just about adoption rates – it’s about economic necessity. Sources suggest that companies implementing proper cloud-native toolchains see infrastructure cost reductions of 20-40% within the first year. Industry data indicates that organizations using open-source tools for cloud development report 60% faster deployment cycles compared to proprietary alternatives.

The artificial intelligence integration is where things get really interesting. The AI supply chain market, valued at over $10 billion, is driving unprecedented demand for cloud-native infrastructure that can handle machine learning workloads, real-time data processing, and automated decision-making at scale. Companies like IBM, Google, Microsoft, and NVIDIA are heavily investing in this space, which translates to better tooling and more robust ecosystems for developers like us.

Now, this is where it gets interesting – the data shows that North America leads adoption with strict regulatory frameworks driving the need for transparent, auditable infrastructure. This regulatory pressure is actually accelerating open-source adoption because compliance teams can inspect every line of code, something impossible with black-box proprietary solutions.

The 5 Game-Changing Open-Source Tools

1. Kubernetes + Crossplane: The Multi-Cloud Control Plane

I’ll be honest – Kubernetes itself isn’t new, but the way we’re using it in 2025 absolutely is. Crossplane transforms Kubernetes into a multi-cloud control plane, allowing you to define and provision cloud infrastructure using Kubernetes-native Custom Resource Definitions (CRDs). This isn’t just orchestration; it’s infrastructure as code on steroids.

Key Features:

- Universal API for all cloud resources (AWS, GCP, Azure).

- GitOps-native infrastructure management.

- Composition engine for creating reusable infrastructure templates.

- Policy enforcement and compliance automation.

The pricing is beautifully simple: it’s free. Crossplane is a CNCF incubating project, which means enterprise-grade support without licensing fees. The only costs are your cloud resources and optional commercial support if you need it.

Best Use Cases:

In my experience, this shines for teams already standardized on Kubernetes who want to extend their skills to infrastructure management. I’ve seen companies use it to create self-service infrastructure platforms where developers can provision entire environments with a simple YAML file.

Real-world example: A fintech startup I worked with reduced its infrastructure provisioning time from 3 days to 30 minutes by implementing Crossplane. Their developers can now spin up complete staging environments that mirror production without touching the AWS console.

Limitations: The learning curve is steep if you’re not already comfortable with Kubernetes operators. Also, debugging infrastructure issues requires understanding both Kubernetes concepts and cloud provider quirks.

2. Terraform + Terragrunt: Infrastructure as Code Perfection

Here’s what I love about Terraform – it’s been the gold standard for infrastructure as code for years, and the ecosystem just keeps getting better. Combined with Terragrunt for configuration hygiene and Atlantis for GitOps workflows, you get a tool that can power scalable, policy-compliant multi-cloud infrastructure setups.

Key Features:

- Declarative syntax that’s human-readable.

- State management with locking and versioning.

- Extensive provider ecosystem covering virtually every cloud service.

- Plan and apply a workflow that shows exactly what will change.

The core tooling is completely open-source. Terraform Cloud offers enterprise features behind a paywall, but honestly, the open-source version handles most production needs beautifully.

Best Use Cases:

This is your go-to for managing large-scale infrastructure across multiple cloud providers. The modular approach means you can create reusable components that enforce organizational standards while giving teams flexibility.

Real-world example: An e-commerce platform managing infrastructure across AWS, GCP, and Azure uses Terraform modules to ensure consistent security policies. Their compliance team loves it because every infrastructure change goes through code review.

Pros and Limitations:

The state file management can be tricky in team environments, and large deployments sometimes run into provider API rate limits. But the maturity and community support more than make up for these minor inconveniences.

3. Serverless Framework: Microservices Made Simple

The Serverless Framework has been around since 2015, but the 2025 version is genuinely transformative. It eliminates infrastructure management complexity with an easy-to-read YAML syntax, letting you focus on writing code instead of wrestling with cloud provider consoles.

Key Features:

- Multi-cloud support (AWS, Azure, Google Cloud).

- Plugin ecosystem with hundreds of community extensions.

- Automatic scaling and event handling.

- Centralized dashboard for monitoring all your functions.

The framework itself is free, and deployment costs are just your cloud provider’s serverless pricing. Companies like Coca-Cola, Reuters, and Nordstrom rely on it for production workloads, which speaks to its enterprise readiness.

Best Use Cases:

Perfect for building APIs, microservices, and data processing workflows. The CI/CD integration is seamless, making it ideal for teams practicing continuous deployment.

Real-world example: A media company processes millions of images daily using Serverless Framework functions that automatically scale from zero to thousands of concurrent executions based on demand.

Challenges: Cold starts can still be an issue for latency-sensitive applications, and debugging distributed serverless applications requires different tooling and mindset than traditional applications.

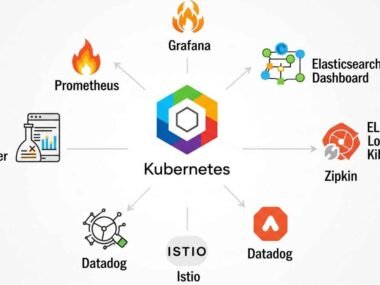

4. Prometheus + Grafana: Observability That Actually Works

Now, this is where most teams get observability wrong – they focus on collecting metrics instead of actionable insights. Prometheus is a CNCF graduated project that revolutionized how we think about monitoring cloud-native applications. Combined with Grafana for visualization, you get enterprise-grade observability without the enterprise price tag.

Key Features:

- Time-series database optimized for metrics collection.

- Powerful query language (PromQL) for complex aggregations.

- Service discovery that automatically finds new services.

- An alerting system that integrates with popular notification tools.

Both tools are completely open-source with optional commercial support. The operational costs are minimal – just the compute resources for your monitoring infrastructure.

Best Use Cases:

Essential for any cloud-native architecture, especially microservices, where understanding service interactions is crucial. The integration with Kubernetes is particularly elegant.

Implementation Reality:

Setting up Prometheus properly requires understanding its data model and query language. I’ve seen teams struggle with cardinality explosion when they instrument everything without thinking about storage implications.

5. Falco: Runtime Security for Cloud-Native

Security is often an afterthought in cloud-native deployments, but Falco changed that by becoming the “de facto Kubernetes threat detection engine”. It graduated from CNCF in February 2024, which means it’s reached full maturity and production readiness.

Key Features:

- Real-time threat detection at the kernel level.

- Rule-based alerting for suspicious behaviors.

- Container and Kubernetes-native integration.

- Low overhead monitoring that doesn’t impact performance.

Falco is completely free as a CNCF graduated project. The operational costs are minimal since it’s designed for efficient runtime monitoring.

Best Use Cases:

Critical for any production Kubernetes environment. It catches attacks that traditional security tools miss because it monitors actual system calls rather than network traffic.

Real-world Security:

I’ve seen Falco detect cryptomining attacks within minutes of container compromise. Traditional security tools would have missed these because the attackers used legitimate container images with malicious payloads.

Implementation Strategy: Getting Started Without Getting Overwhelmed

Here’s the truth: trying to implement all these tools simultaneously is a recipe for disaster. I’ve watched too many teams burn out attempting big-bang transformations that never quite work.

Start with infrastructure as code using either Terraform or Crossplane, depending on your Kubernetes maturity. Get comfortable with declarative infrastructure before adding complexity. The key is choosing tools that align with your team’s existing skills – if you’re already deep into Kubernetes, Crossplane makes sense. If you’re cloud-provider agnostic, start with Terraform.

Integration considerations are crucial. These tools work beautifully together, but each has its own configuration paradigms and operational requirements. Plan for at least 2-3 months to get comfortable with any new tool in production.

Common setup mistakes I see repeatedly:

- Over-engineering the initial implementation – start simple and evolve.

- Ignoring backup and disaster recovery for tool configurations.

- Not establishing clear ownership for tool maintenance and upgrades.

- Skipping security hardening because “it’s just internal tooling”

Timeline expectations: Plan for 6-12 weeks to see meaningful productivity improvements from any single tool. The learning curve is real, but the long-term benefits compound dramatically.

Budget planning is straightforward with open-source tools – your costs are primarily compute resources and optional commercial support. I typically recommend budgeting 10-15% of your cloud spend for monitoring and tooling infrastructure.

Measuring Success: Beyond Vanity Metrics

The metrics that actually matter aren’t the ones most teams track. Sure, deployment frequency and lead time are important, but I’m more interested in developer productivity and system reliability under stress.

Key metrics to track:

- Mean Time to Recovery (MTTR) from incidents.

- Infrastructure drift detection and remediation time.

- Developer velocity is measured by feature delivery, not code commits.

- Cost per workload across different environments.

ROI calculation methods should include both hard savings (reduced infrastructure costs, faster incident resolution) and soft benefits (developer satisfaction, reduced cognitive load). According to recent studies, teams using proper cloud-native toolchains report 40% less time spent on operational tasks.

When to scale or switch tools becomes obvious when you start hitting limitations that impact business outcomes. If your monitoring system can’t handle your metric volume, or your deployment pipeline becomes a bottleneck, it’s time to evolve.

Long-term optimization strategies focus on automation and intelligence. The goal isn’t just to manage complexity – it’s to eliminate it through better tooling and practices.

The Path Forward

The transformative potential of these open-source tools for cloud-native developers isn’t just about technology – it’s about fundamentally changing how we think about infrastructure, security, and developer productivity. The artificial intelligence revolution in supply chain management demands infrastructure that can scale intelligently and adapt to changing requirements without manual intervention.

My recommendation? Start with one tool that addresses your biggest current pain point. If deployments are slow and error-prone, begin with Terraform. If you can’t see what’s happening in production, implement Prometheus and Grafana first. The key is building confidence and expertise before expanding your toolkit.

The cloud-native ecosystem has reached a maturity level where small teams can build systems that rival enterprise platforms from just a few years ago. That’s not just exciting – it’s game-changing for anyone building software in 2025 and beyond.