Here’s a statistic that might make you pause mid-coffee: companies adopting serverless architecture report an average 20-30% reduction in infrastructure costs within the first year, according to recent AWS enterprise surveys. Yet, I keep running into executives who treat serverless like it’s some experimental tech that’s “nice to have” rather than a legitimate business strategy. The truth is, while your competitors are debating whether serverless is ready for prime time, early adopters are already banking the savings and scaling faster than ever.

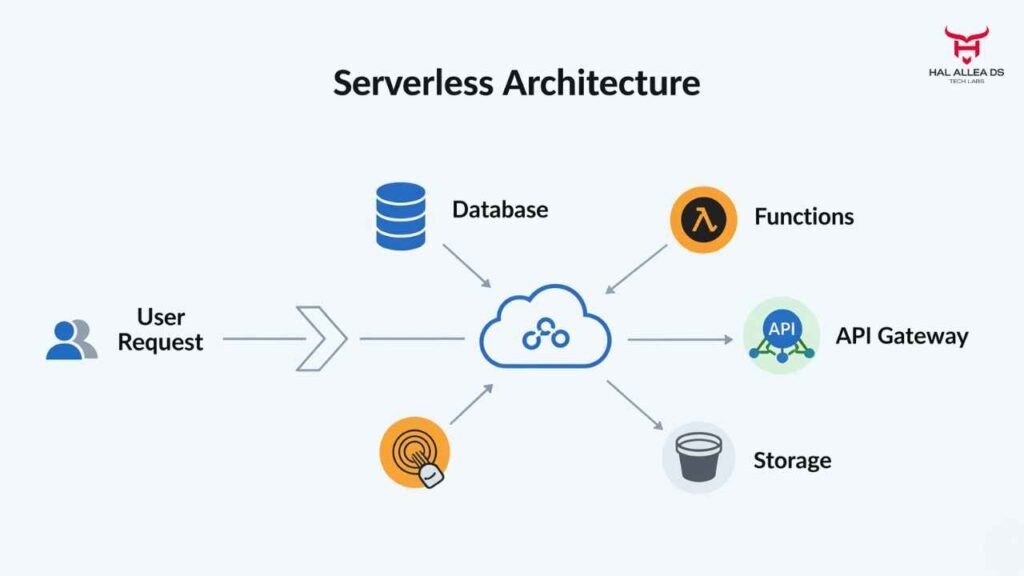

The core problem isn’t technical—it’s perception. Most businesses are still stuck in the traditional mindset of managing servers, predicting capacity, and paying for idle resources. This affects everyone from CTOs wrestling with unpredictable scaling costs to CFOs watching infrastructure budgets balloon during traffic spikes. Marketing teams launching campaigns worry about server crashes, while development teams spend more time managing infrastructure than building features.

Here’s the thing, though: serverless isn’t just about cutting costs. It’s about fundamentally changing how you think about business agility and resource allocation.

The Data Behind the Serverless Revolution

Let’s talk numbers, because I know that’s what gets executive attention. The serverless market isn’t just growing—it’s exploding. Industry data indicates the global serverless architecture market is projected to reach $36.84 billion by 2028, growing at a compound annual growth rate of 23.4%. That’s not startup growth; that’s enterprise adoption at scale.

But here’s what most people don’t realize about these numbers. When AWS reports that Lambda processes over 10 trillion requests per month, we’re not talking about toy applications. These are mission-critical workloads running everything from real-time data processing pipelines to customer-facing web applications. Microsoft Azure serverless functions are powering enterprise applications that handle millions of transactions daily.

The ROI data gets even more compelling when you dig deeper. Sources suggest that companies using Function-as-a-Service (FaaS) platforms see a 60-80% reduction in operational overhead. That’s not just server costs; it includes the hidden expenses of system administration, capacity planning, and infrastructure maintenance that eat into your bottom line every quarter.

Now, this is where it gets interesting. Traditional EC2 instances run 24/7 whether you’re using them or not. With serverless, you pay only per request and for the milliseconds your code actually runs. During a recent project with a client in the e-commerce space, their Lambda functions absorbed Black Friday traffic spikes automatically, whereas their traditional infrastructure would have required weeks of capacity planning and significant upfront investment.

The event-driven architecture model means your applications respond to actual demand, not predicted demand. That’s the difference between paying for a full-time security guard versus one who only shows up when the alarm goes off.
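To make the always-on versus on-demand trade-off concrete, here is a rough sketch that estimates the monthly request volume at which pay-per-use spend would match a fixed server bill. It uses the Lambda rates quoted later in this article; the $70 server cost, 200 ms duration, and 512 MB memory size are made-up inputs for illustration, not figures from any client.

```python
# Lambda rates as quoted in this article (free tier ignored).
PRICE_PER_REQUEST = 0.20 / 1_000_000
PRICE_PER_GB_SECOND = 0.0000166667


def break_even_requests(fixed_monthly_cost: float, avg_duration_ms: float, memory_mb: int) -> int:
    """Monthly request count at which pay-per-use spend equals a fixed always-on bill."""
    compute_per_request = (avg_duration_ms / 1000) * (memory_mb / 1024) * PRICE_PER_GB_SECOND
    cost_per_request = PRICE_PER_REQUEST + compute_per_request
    return int(fixed_monthly_cost / cost_per_request)


# Hypothetical inputs: a $70/month always-on server vs. 200 ms functions at 512 MB.
print(break_even_requests(70, 200, 512))
```

Below that volume the on-demand model is cheaper under these assumptions; above it, the comparison needs a closer look at actual utilization.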

Five Serverless Tools That Deliver Measurable ROI

1. AWS Lambda: The Foundation Layer

Lambda isn’t just another cloud service—it’s the Swiss Army knife of serverless computing. At its core, Lambda lets you run code without provisioning servers, automatically scaling from zero to thousands of concurrent executions.

Key Serverless Architecture Benefits:

- Automatic scaling with zero configuration.

- Built-in fault tolerance and high availability.

- Integration with the entire AWS ecosystem.

- Support for multiple programming languages, including Node.js, Python, and Java.

Pricing Structure: You pay $0.20 per 1 million requests plus compute time ($0.0000166667 per GB-second). Most small applications run for under $5 monthly, while enterprise workloads scale proportionally.
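As a back-of-the-envelope check on those rates, the sketch below estimates a monthly bill from request count, average duration, and memory size. The workload numbers are hypothetical, and the calculation ignores the free tier and any other AWS charges (data transfer, API Gateway, and so on).

```python
# Rates quoted in the pricing paragraph above (free tier ignored).
PRICE_PER_MILLION_REQUESTS = 0.20
PRICE_PER_GB_SECOND = 0.0000166667


def estimate_monthly_cost(requests: int, avg_duration_ms: float, memory_mb: int) -> float:
    """Return an estimated monthly Lambda bill in USD."""
    request_cost = requests / 1_000_000 * PRICE_PER_MILLION_REQUESTS
    # Compute is billed in GB-seconds: duration in seconds times memory in GB.
    gb_seconds = requests * (avg_duration_ms / 1000) * (memory_mb / 1024)
    compute_cost = gb_seconds * PRICE_PER_GB_SECOND
    return round(request_cost + compute_cost, 2)


# Hypothetical workload: 3 million requests a month, 200 ms each, 512 MB of memory.
print(estimate_monthly_cost(3_000_000, 200, 512))  # 5.6
```

Under these assumptions, three million requests a month lands at about $5.60, which is in line with the "around $5 monthly" range for small applications.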

Best Use Cases: API backends, real-time data processing, microservices backends, and event-driven workflows. I’ve seen companies use Lambda to process customer uploads, send automated email campaigns, and handle payment webhooks seamlessly.
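For a sense of how small one of these functions can be, here is a minimal sketch of a payment webhook handler, assuming the request arrives through an API Gateway proxy integration (so the JSON payload is a string under `event["body"]`). The `type` and `payment_id` field names are hypothetical and would depend on your payment provider.

```python
import json


def _response(status_code: int, body: dict) -> dict:
    """Build a response in the shape an API Gateway proxy integration expects."""
    return {"statusCode": status_code, "body": json.dumps(body)}


def handler(event, context):
    """Handle a payment webhook; stateless, so any instance can serve any request."""
    try:
        payload = json.loads(event.get("body") or "{}")
    except json.JSONDecodeError:
        return _response(400, {"error": "invalid JSON"})

    # "type" and "payment_id" are assumed field names for this sketch.
    if payload.get("type") != "payment.succeeded":
        return _response(200, {"status": "ignored"})

    payment_id = payload.get("payment_id")
    if not payment_id:
        return _response(400, {"error": "missing payment_id"})

    # A real handler would verify the provider's signature and record the payment here.
    return _response(200, {"status": "processed", "payment_id": payment_id})
```

The handler keeps no state between calls, which is what lets the platform run as many copies in parallel as traffic requires.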

Real-world Implementation: A logistics company I worked with replaced its traditional job processing system with Lambda functions. Result? Their batch processing costs dropped 70% while processing speed increased threefold. The functions automatically scaled during peak shipping seasons without any manual intervention.

Pros and Limitations: The 15-minute execution limit means Lambda isn’t suitable for long-running processes, but honestly, if your function takes longer than that, you probably need to rethink your architecture anyway.
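One common way to architect around the time limit is to process work in chunks and stop before the clock runs out. The sketch below relies on the Lambda context object's `get_remaining_time_in_millis()` method; the 10-second safety margin, the `process_item` placeholder, and the `FakeContext` stand-in used to run it locally are all assumptions for illustration.

```python
SAFETY_MARGIN_MS = 10_000  # assumed buffer; tune for your workload


def process_item(item: int) -> int:
    """Placeholder for the real unit of work (hypothetical)."""
    return item * 2


def handler(event, context):
    """Process as many items as the time budget allows and hand back the rest."""
    items = list(event.get("items", []))
    results = []
    while items and context.get_remaining_time_in_millis() > SAFETY_MARGIN_MS:
        results.append(process_item(items.pop(0)))
    # The caller (a queue or workflow engine, for example) can re-invoke with "remaining".
    return {"results": results, "remaining": items}


class FakeContext:
    """Local stand-in for the Lambda context object, so the sketch runs off-platform."""

    def __init__(self, remaining_ms: int, cost_per_check_ms: int):
        self.remaining_ms = remaining_ms
        self.cost_per_check_ms = cost_per_check_ms

    def get_remaining_time_in_millis(self) -> int:
        current = self.remaining_ms
        self.remaining_ms -= self.cost_per_check_ms
        return current


print(handler({"items": [1, 2, 3, 4, 5]}, FakeContext(25_000, 5_000)))
```

If a job needs many rounds of this, that is usually the signal to move it to a workflow service or a container rather than stretching a single function.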

2. Azure Functions: Microsoft’s Enterprise Play

Azure Functions brings serverless computing to the Microsoft ecosystem, and for companies already invested in Azure infrastructure, the integration benefits are substantial.

Key Serverless Architecture Benefits:

- Seamless integration with Office 365 and Dynamics.

- Built-in authentication and authorization.

- Support for both consumption and premium plans.

- Visual Studio integration for .NET developers.

Pricing Structure: Consumption-based pricing similar to Lambda’s, with additional premium plans starting at $168/month for enhanced performance and VNet connectivity.

Best Use Cases: Perfect for enterprises running SharePoint workflows, Teams integrations, and Power Platform automations. The tight integration with Azure services like Synapse and SQL makes it ideal for data pipeline automation.

Real-world Implementation: A manufacturing client used Azure Functions to automate their supply chain alerts. When inventory levels hit specific thresholds, functions automatically trigger purchase orders through their ERP system. The entire process, which previously required manual oversight, now runs without intervention.
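The core of a threshold alert like this is only a few lines. The sketch below shows just the decision logic in plain Python, leaving out the Azure Functions trigger bindings and the ERP call; the `StockLevel` fields and the purchase-order shape are hypothetical, not the client's actual schema.

```python
from dataclasses import dataclass


@dataclass
class StockLevel:
    sku: str
    on_hand: int
    reorder_point: int      # threshold that triggers a purchase order
    reorder_quantity: int   # how much to order when triggered


def build_purchase_orders(levels: list[StockLevel]) -> list[dict]:
    """Return one purchase-order dict per SKU at or below its reorder point."""
    return [
        {"sku": level.sku, "quantity": level.reorder_quantity}
        for level in levels
        if level.on_hand <= level.reorder_point
    ]


# Hypothetical inventory snapshot.
snapshot = [
    StockLevel("BOLT-M8", on_hand=120, reorder_point=200, reorder_quantity=1000),
    StockLevel("NUT-M8", on_hand=900, reorder_point=200, reorder_quantity=1000),
]
print(build_purchase_orders(snapshot))
```

In a deployed function, a timer or queue trigger would supply the snapshot and the returned orders would be sent on to the ERP system.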

Pros and Limitations: The Microsoft ecosystem lock-in can be limiting if you’re planning multi-cloud strategies, but for Azure-native companies, the integration benefits far outweigh this concern.

3. Google Cloud Functions: The AI-Forward Approach

Google’s serverless offering shines particularly bright when you’re dealing with machine learning workflows or need tight integration with Google’s AI services.

Key Serverless Architecture Benefits:

- Native integration with TensorFlow and AI Platform.

- Automatic scaling with Google’s global network.

- Built-in observability and monitoring.

- Strong support for container-based deployments.

Pricing Structure: Pay-per-use model similar to other providers, with a generous free tier (2 million invocations monthly).

Best Use Cases: Image processing, natural language processing, IoT data ingestion, and any application requiring Google’s AI services. The integration with BigQuery makes it powerful for real-time analytics.

Real-world Implementation: An e-commerce platform used Cloud Functions to automatically categorize product images using Google’s Vision API. What previously required manual tagging now happens automatically at upload, improving search accuracy while reducing operational costs by 40%.

Pros and Limitations: Less enterprise-focused than AWS or Azure, but the AI integration capabilities are unmatched if that’s your primary use case.

4. Serverless Framework: The Developer Experience Game-Changer

Here’s where things get practical. The Serverless Framework isn’t a cloud provider—it’s the deployment and management layer that makes serverless architecture actually manageable at enterprise scale.

Key Serverless Architecture Benefits:

- Multi-cloud deployment from a single configuration.

- Infrastructure as code with automatic rollbacks.

- Built-in monitoring and alerting.

- Plugin ecosystem for extended functionality.

Pricing Structure: Open source core with enterprise features starting at $25/developer/month. The ROI comes from reduced deployment complexity, not the tool cost.

Best Use Cases: Managing complex microservices architectures, multi-environment deployments, and teams that need consistent deployment practices across different cloud providers.

Real-world Implementation: A fintech startup used Serverless Framework to deploy identical applications across AWS and Azure for regulatory compliance. The framework reduced their deployment complexity by 60% while maintaining consistency across environments.

Pros and Limitations: The learning curve can be steep for teams new to infrastructure as code, but the long-term maintainability benefits are significant.

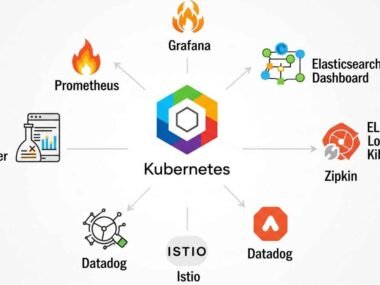

5. Kubernetes with Knative: The Hybrid Approach

For organizations already invested in Kubernetes, Knative brings serverless capabilities to existing container orchestration infrastructure.

Key Serverless Architecture Benefits:

- Runs on existing Kubernetes clusters.

- Scale-to-zero capabilities for cost optimization.

- Custom resource definitions for complex workflows.

- On-premises or multi-cloud flexibility.

Pricing Structure: No additional licensing—you pay for the underlying Kubernetes infrastructure, but optimize utilization through scale-to-zero.

Best Use Cases: Organizations with existing Kubernetes expertise, hybrid cloud requirements, or regulatory constraints requiring on-premises deployment options.

Real-world Implementation: A healthcare provider used Knative to process patient data while maintaining HIPAA compliance on their existing Kubernetes infrastructure. The scale-to-zero feature reduced their compute costs by 45% during off-hours.

Pros and Limitations: Requires significant Kubernetes expertise and operational overhead, but provides maximum flexibility for complex enterprise requirements.

Implementation Strategy: Getting Serverless Right

Choosing the right serverless architecture approach isn’t about picking the coolest technology; it’s about matching capabilities to business requirements. The biggest mistake I see organizations make is trying to go “all-in” on serverless from day one.

Start with the Edge Cases: Begin with applications that have unpredictable traffic patterns or clear event-driven workflows. API gateways, webhook handlers, and batch processing jobs are perfect starter projects. These typically show immediate ROI and help your team build serverless expertise without risking core business systems.

Integration Considerations: Here’s the thing, though: serverless doesn’t exist in isolation. Your functions need to talk to databases, third-party APIs, and existing systems. Plan your networking, security, and monitoring strategy before you deploy your first function. I’ve seen too many projects stall because teams didn’t consider how Lambda functions would access their private databases or handle API authentication.

Common Setup Mistakes to Avoid: Don’t try to lift-and-shift existing applications to serverless. Monolithic applications need to be decomposed into smaller, stateless functions. Also, resist the urge to create one giant function that does everything—that’s just a server without the server management benefits.

Timeline Expectations: Honestly, expect 3-6 months to see meaningful results from serverless adoption. The first month is learning and experimentation, months 2-4 are building and refining your first production workloads, and months 5-6 are where you start seeing the operational efficiency gains that drive ROI.

Budget Planning: Start with a pilot budget of $5,000-$15,000 for experimentation and training. The actual serverless costs will likely be lower than your current infrastructure spend, but factor in learning curve time and potential consulting costs if your team needs external expertise.

Measuring Success: ROI Metrics That Matter

The truth is, measuring serverless ROI requires looking beyond simple cost comparisons. Yes, you’ll likely see immediate infrastructure cost reductions, but the real value comes from operational efficiency and business agility.

Key Metrics to Track:

- Infrastructure cost per transaction: This is your baseline serverless benefit.

- Time to market for new features: Serverless typically reduces deployment friction.

- Operational overhead hours: Track time spent on server management vs. feature development.

- Scaling response time: How quickly your applications handle traffic spikes.

- Developer productivity: Lines of code deployed per developer per sprint.

ROI Calculation Methods: In my experience, the total cost of ownership calculation should include infrastructure costs, operational labor, and opportunity costs of delayed features. A typical enterprise sees a 25-40% TCO reduction in the first year, with increasing benefits as the team becomes more proficient.
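A TCO comparison along those lines can be sketched in a few lines of Python. The three cost components come from the paragraph above; the dollar amounts are invented purely to show the arithmetic and should be replaced with your own before-and-after figures.

```python
from dataclasses import dataclass


@dataclass
class AnnualCosts:
    infrastructure: float
    operational_labor: float
    delayed_feature_opportunity: float

    @property
    def total(self) -> float:
        return self.infrastructure + self.operational_labor + self.delayed_feature_opportunity


def tco_reduction_pct(before: AnnualCosts, after: AnnualCosts) -> float:
    """Percentage drop in total cost of ownership from 'before' to 'after'."""
    return round((before.total - after.total) / before.total * 100, 1)


# Made-up annual figures in USD, for illustration only.
before = AnnualCosts(infrastructure=240_000, operational_labor=180_000, delayed_feature_opportunity=80_000)
after = AnnualCosts(infrastructure=150_000, operational_labor=130_000, delayed_feature_opportunity=70_000)
print(tco_reduction_pct(before, after))  # 30.0
```

Keeping the three components separate makes it easier to see whether savings come from the infrastructure bill itself or from reclaimed engineering time.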

When to Scale or Switch Tools: If you’re consistently hitting platform limits (like Lambda’s 15-minute timeout), it’s time to consider hybrid approaches or different tools. But don’t switch tools because of isolated edge cases—architect around the limitations first.

Long-term Optimization Strategies: The real ROI comes from architectural improvements that serverless enables. Event-driven architectures, automatic scaling, and pay-per-use pricing compound over time. Organizations that embrace these patterns see accelerating returns in years 2-3.

Now, this is where it gets interesting from a business perspective. The metrics that matter most to executives aren’t always the technical ones. Focus on business impact: faster feature delivery, improved customer experience during traffic spikes, and reduced time spent on infrastructure firefighting.

The Bottom Line on Serverless ROI

The transformation potential of serverless architecture isn’t theoretical anymore—it’s proven across industries and company sizes. From startups handling unpredictable growth to enterprises optimizing decades-old infrastructure, serverless delivers measurable business value when implemented thoughtfully.

That said, serverless isn’t a magic solution that fixes all infrastructure problems. It’s a tool that, when used correctly, fundamentally changes how you think about building and scaling applications. The companies seeing the biggest ROI are those that embrace the event-driven, stateless patterns that make serverless architectures powerful.

Ready to start? Pick one clear use case—maybe that batch job that runs twice a week or the API that gets hammered during marketing campaigns. Build a simple serverless implementation, measure the results, and use that success to build organizational confidence for larger initiatives. The key is starting small and scaling your expertise along with your infrastructure.

The serverless revolution is happening whether your organization participates or not. The question isn’t whether serverless will transform software infrastructure—it’s whether your company will be leading that transformation or scrambling to catch up.